What is ETL (Extract, Transform, Load)?

Discover the essentials of ETL (Extract, Transform, Load) in data processing.

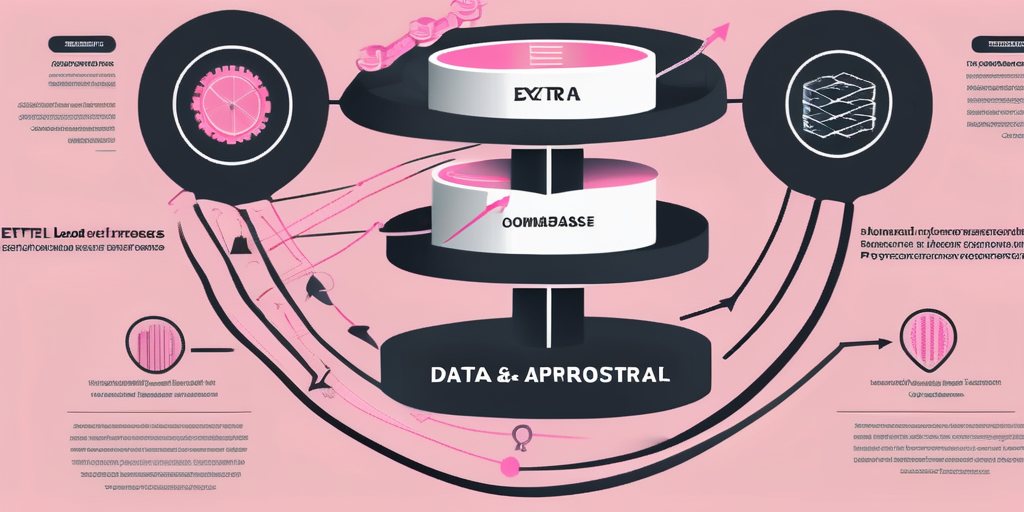

ETL, which stands for Extract, Transform, Load, is a crucial process in data integration and management. It serves as a backbone for various data-related applications, particularly in data warehousing and business intelligence. By systematically extracting data from multiple sources, transforming it into a suitable format, and loading it into a target system, ETL facilitates effective data analysis and decision-making.

Understanding the Basics of ETL

Definition of ETL

ETL is a data processing framework that enables organizations to gather data from different sources, manipulate it as needed, and store it in a centralized location. The 'Extract' phase involves pulling data from disparate databases and sources, which may include cloud applications, on-premises databases, flat files, or external APIs. The 'Transform' phase refers to cleaning, normalizing, and enriching that data, ensuring it is ready for analysis and reporting. Finally, the 'Load' phase sees the prepared data being moved into a target system such as a data warehouse or a data mart.

ETL improves operational efficiency by streamlining data handling and making high-quality data readily available for decision-making. Organizations can use it to consolidate their data silos and gain a comprehensive view of their operations. The process can also be automated, which speeds it up and reduces manual errors, allowing businesses to respond more quickly to market changes and customer needs. ETL tools also let organizations schedule regular updates (for example, nightly or hourly), so the data in the target system stays current.
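To make the three phases concrete, here is a minimal Python sketch of a complete pipeline. It assumes a small, hypothetical CSV export as the source and an in-memory SQLite database as the target. The `orders` table and the cleaning rules are illustrative only; production pipelines would typically rely on a dedicated ETL tool or framework rather than hand-written functions like these.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a flat-file export with inconsistent formatting.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,BOB,5
3,carol,not_a_number
"""

def extract(csv_text: str) -> list[dict]:
    """Extract: read raw rows from a flat file (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(csv_text)))

def transform(rows: list[dict]) -> list[tuple[int, str, float]]:
    """Transform: trim and normalize names, cast types, drop rows that fail conversion."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows with a malformed amount
        clean.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return clean

def load(rows: list[tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the prepared rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```

Keeping extract, transform, and load as separate functions mirrors the three phases, so each one can be tested or swapped out independently, for example by replacing the CSV reader with an API client.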

The Importance of ETL in Data Management

Implementing ETL processes is essential for organizations aiming to make data-driven decisions. As data continues to grow in volume and complexity, an effective ETL strategy enables businesses to harness that data efficiently. ETL assists in maintaining data quality, enhancing consistency, and increasing reliability, which are all vital for business operations.

ETL also supports data governance and compliance with regulatory standards. Accurate, well-managed data can lead to better customer insights, improved operational efficiency, and ultimately, increased profitability.

Beyond data management, ETL plays a critical role in analytics and business intelligence initiatives. By turning raw data into actionable insights, organizations can identify trends, forecast future outcomes, and make informed strategic decisions. And as businesses increasingly adopt cloud technologies, ETL processes are evolving to handle hybrid environments, integrating on-premises and cloud data sources for greater flexibility and scalability.

The Three Stages of ETL

Extracting Data: What Does It Mean?

The extraction stage of ETL is where data is pulled from various source systems. This may include traditional relational databases, NoSQL systems, cloud databases, and even web APIs. The main goal during this phase is to gather all necessary data, irrespective of its original format or location.

Effective extraction minimizes the load placed on source systems, so they remain fully operational while data is being pulled. Depending on business requirements and data volume, this is typically done either through full extraction, which reads the entire source dataset on every run, or through incremental extraction, which reads only the records that changed since the previous run. The frequency of extraction also matters: some organizations require real-time data feeds, while others operate on a daily or weekly schedule. This choice significantly affects the overall architecture of the ETL process and the tools used for extraction.
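Incremental extraction is often implemented with a high-watermark: the pipeline remembers the latest change timestamp it has seen and, on the next run, asks the source only for rows modified after it. The sketch below assumes a hypothetical `customers` source table with an ISO-8601 `updated_at` column, and uses SQLite so it runs on its own.

```python
import sqlite3

def extract_incremental(conn: sqlite3.Connection, last_watermark: str):
    """Pull only rows changed since the previous run (high-watermark pattern).

    Assumes ISO-8601 `updated_at` strings, so string order matches time order.
    """
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest timestamp seen; keep the old one if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER, name TEXT, updated_at TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Alice", "2024-01-01T09:00:00"),
        (2, "Bob", "2024-01-02T10:30:00"),
        (3, "Carol", "2024-01-03T08:15:00"),
    ],
)
source.commit()

# First run: starting from an empty watermark behaves like a full extraction.
batch, watermark = extract_incremental(source, "")
# Second run: nothing has changed, so nothing is re-read.
batch2, watermark2 = extract_incremental(source, watermark)

# A source-side update is picked up by the next run, and only that row is read.
source.execute("UPDATE customers SET name = 'Robert', updated_at = '2024-01-04T12:00:00' WHERE id = 2")
source.commit()
batch3, watermark3 = extract_incremental(source, watermark2)
```

One caveat of this simple version: a row committed later with a timestamp equal to the stored watermark would be skipped, which is why real implementations often overlap the window slightly or read changes from the database log (change data capture) instead.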

Transforming Data: The Crucial Step

Transformation is arguably the most complex stage of ETL. It involves a series of processes that make the extracted data clean, consistent, and suitable for analysis. Typical transformation activities include filtering, aggregating, and sorting the data, as well as applying business rules that improve its quality and relevance.
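The filtering, normalizing, aggregating, and sorting steps of the transformation stage can be illustrated with plain Python. This is a sketch over hypothetical sales records; the rule that cancelled orders are excluded stands in for whatever business rules an organization actually applies.

```python
from collections import defaultdict

# Hypothetical extracted sales records, including a messy region name.
sales = [
    {"region": "north", "product": "A", "amount": 120.0, "status": "complete"},
    {"region": "south", "product": "B", "amount": 80.0, "status": "cancelled"},
    {"region": "North ", "product": "B", "amount": 50.0, "status": "complete"},
    {"region": "south", "product": "A", "amount": 200.0, "status": "complete"},
]

def transform_sales(records):
    # Filter: a sample business rule that excludes cancelled orders.
    completed = [r for r in records if r["status"] == "complete"]
    # Normalize + aggregate: trim and lowercase regions so "North " and "north" group together.
    totals = defaultdict(float)
    for r in completed:
        totals[r["region"].strip().lower()] += r["amount"]
    # Sort: total revenue per region, highest first.
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)

print(transform_sales(sales))
# [('south', 200.0), ('north', 170.0)]
```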

This stage may also involve data enrichment, where supplementary data is added to give records more context. For example, merging data from various sources can provide a comprehensive view of customer behavior. Preserving data integrity and accuracy during transformation ultimately strengthens an organization's analytical capabilities. The transformation process also often includes validation checks that flag anomalies or discrepancies that could skew analysis results. Robust validation mechanisms help organizations maintain high data quality standards, which are essential for making informed business decisions.
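Enrichment and validation are often done in the same pass. In this minimal sketch, each order is checked against two sample rules (no negative amounts, and the customer must exist) and, if it passes, is enriched with attributes from a hypothetical customer reference table. Failing records are set aside together with the reasons they failed rather than silently dropped, so they can be reviewed.

```python
def validate_and_enrich(orders, customers):
    """Split orders into (valid, rejected), enriching valid ones with customer attributes.

    `customers` is a hypothetical reference dataset keyed by customer_id.
    """
    valid, rejected = [], []
    for order in orders:
        errors = []
        if order["amount"] < 0:
            errors.append("negative amount")
        customer = customers.get(order["customer_id"])
        if customer is None:
            errors.append("unknown customer")
        if errors:
            # Keep failing records for review instead of silently dropping them.
            rejected.append({**order, "errors": errors})
        else:
            # Enrichment: merge in attributes from the reference data.
            valid.append({**order, "segment": customer["segment"], "country": customer["country"]})
    return valid, rejected

customers = {
    101: {"segment": "enterprise", "country": "FR"},
    102: {"segment": "smb", "country": "US"},
}
orders = [
    {"order_id": 1, "customer_id": 101, "amount": 250.0},
    {"order_id": 2, "customer_id": 999, "amount": 40.0},
    {"order_id": 3, "customer_id": 102, "amount": -5.0},
]
valid, rejected = validate_and_enrich(orders, customers)
```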

Loading Data: The Final Stage

After data has been thoroughly transformed, it enters the loading phase where it is stored in the target system, commonly a data warehouse. Efficient loading processes are crucial to ensure that data is available for reporting and analysis in a timely manner.

Loading can be done as a full load, where the entire dataset is written to the target (typically replacing its previous contents), or as an incremental load, where only new or updated records are pushed to the target. The right loading strategy depends on the use case, performance needs, and the nature of the data being processed. Organizations must also consider data latency and its impact on business intelligence tools that rely on up-to-date information. A well-structured loading process not only optimizes performance but also supports data governance, ensuring that stakeholders have access to reliable and timely insights for strategic decision-making.
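The difference between the two loading strategies can be sketched with SQLite, used here only as a stand-in for a warehouse. The full load clears and rewrites the table inside one transaction, while the incremental load performs an upsert keyed on the primary key. The `dim_customer` table is hypothetical, and the `ON CONFLICT ... DO UPDATE` syntax assumes SQLite 3.24 or later; warehouses usually express the same idea with their own `MERGE` or upsert statements.

```python
import sqlite3

def full_load(conn: sqlite3.Connection, rows) -> None:
    """Full load: replace the entire target table contents in a single transaction."""
    with conn:
        conn.execute("DELETE FROM dim_customer")
        conn.executemany("INSERT INTO dim_customer (id, name) VALUES (?, ?)", rows)

def incremental_load(conn: sqlite3.Connection, rows) -> None:
    """Incremental load: insert new keys, update existing ones, leave other rows untouched."""
    with conn:
        conn.executemany(
            "INSERT INTO dim_customer (id, name) VALUES (?, ?) "
            "ON CONFLICT(id) DO UPDATE SET name = excluded.name",
            rows,
        )

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dim_customer (id INTEGER PRIMARY KEY, name TEXT)")
full_load(conn, [(1, "Alice"), (2, "Bob")])
incremental_load(conn, [(2, "Robert"), (3, "Carol")])
print(conn.execute("SELECT id, name FROM dim_customer ORDER BY id").fetchall())
# [(1, 'Alice'), (2, 'Robert'), (3, 'Carol')]
```

Because the upsert only touches the keys it receives, row 1 survives the incremental load unchanged; a full load with the same input would have removed it.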

The Role of ETL in Business Intelligence

ETL and Data Warehousing

ETL plays a significant role within the realm of data warehousing, serving as the means to populate data warehouses with clean and organized data. By establishing an efficient ETL process, businesses can ensure that their data warehouses are continually updated and reflective of the latest operational data.

A well-designed ETL strategy supports the data warehouse architecture by enabling companies to perform complex queries and reporting, ultimately driving better business insights. Furthermore, it helps in organizing the vast amounts of data collected from different departments, aiding in the creation of a unified view of information. This integration is crucial, as it allows stakeholders to access a single source of truth, reducing the discrepancies that often arise from siloed data systems. Additionally, the ETL process can incorporate historical data, which enriches the analysis and provides context for current trends, thus enhancing decision-making capabilities.

ETL in Data Mining

Data mining, which involves discovering patterns and knowledge from large sets of data, greatly benefits from an effective ETL process. Before conducting any analytic operations or developing predictive models, organizations must first ensure that their data is accurately prepared and accessible.

ETL provides the foundational work necessary for data mining tasks, as it ensures that data is relevant, timely, and in a usable format. Through proper data preparation achieved via ETL, companies can uncover insights that inform strategic decisions. Moreover, the ETL process can include data validation and cleansing steps that enhance the quality of the data being analyzed. This is particularly important in industries such as finance and healthcare, where data integrity is paramount. By ensuring that the data is not only clean but also enriched with additional context, organizations can apply more sophisticated algorithms in their data mining efforts, leading to deeper insights and more accurate predictions.

ETL Tools and Technologies

Overview of ETL Tools

There is a wide array of ETL tools available in the marketplace, ranging from open-source solutions to proprietary software. Tools such as Apache NiFi, Talend, and Informatica PowerCenter are popular choices among organizations looking for robust ETL capabilities. Each of these tools offers features tailored to different business needs, so organizations should carefully assess their requirements before making a selection.

These tools often come equipped with user-friendly interfaces and features like data mapping, workflow automation, and monitoring capabilities, enhancing the overall ETL process. Automation allows organizations to streamline their ETL workflows, thereby reducing the potential for human error while increasing operational efficiency. Additionally, many modern ETL tools support cloud integration, enabling businesses to leverage the scalability and flexibility of cloud computing for their data processing needs.

Selecting the Right ETL Tool for Your Needs

Choosing the right ETL tool requires a thorough evaluation of business needs, technical requirements, and budget constraints. Key factors to consider include the supported data sources, scalability, ease of use, and the ability to handle real-time data integration. Organizations should also evaluate the tool's performance in processing large volumes of data, as this can significantly impact data analytics and reporting capabilities.

It is also important to assess the tool's community support and documentation, as having a robust support network can help resolve potential issues during implementation and execution. Furthermore, organizations should consider the tool's compatibility with existing systems and technologies, as seamless integration can lead to a more efficient data ecosystem. Ultimately, selecting the right ETL tool can significantly improve the efficiency and effectiveness of an organization's data management strategy, enabling better decision-making and insights derived from their data assets.

Challenges and Solutions in the ETL Process

Common ETL Challenges

Despite its importance, the ETL process is not without challenges. Common issues include handling large volumes of data, ensuring data quality, and managing the complexity of diverse data sources. Organizations may also face difficulties in maintaining performance during peak loads, which can hinder data processing capabilities.

Moreover, data governance and compliance challenges arise, particularly when dealing with sensitive data. Organizations must ensure that they are meeting regulatory requirements throughout the ETL process.
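When sensitive fields must pass through the pipeline, one common technical safeguard is to pseudonymize them during the transform step. The sketch below replaces hypothetical `email` and `phone` columns with a keyed HMAC-SHA256 hash, so the values can still serve as join keys without being readable downstream. The hard-coded key is a placeholder; a real deployment would load it from a secrets manager. Whether this satisfies a particular regulation is a legal question: under the GDPR, for example, pseudonymized data is still considered personal data.

```python
import hashlib
import hmac

# Placeholder only: a real key would come from a secrets manager, never from source code.
SECRET_KEY = b"example-key-do-not-use"

# Hypothetical list of columns classified as sensitive.
SENSITIVE_FIELDS = {"email", "phone"}

def pseudonymize(record: dict) -> dict:
    """Replace sensitive values with a keyed hash: same input -> same token, but not readable."""
    out = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS and value is not None:
            digest = hmac.new(SECRET_KEY, str(value).encode("utf-8"), hashlib.sha256)
            out[field] = digest.hexdigest()
        else:
            out[field] = value  # non-sensitive fields and missing values pass through
    return out

masked = pseudonymize({"customer_id": 7, "email": "ana@example.com", "phone": None})
```

Using a keyed hash rather than a plain one matters here: without the key, an attacker cannot simply hash a list of known email addresses and match them against the output.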

Effective Solutions for ETL Issues

To address the common challenges associated with ETL, organizations can adopt various strategies. Implementing data quality tools that monitor and clean data throughout the ETL process can enhance data reliability. Additionally, using scalable cloud-based ETL solutions can help manage large volumes of data efficiently.
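For large volumes, a common complement to scalable infrastructure is to process data in fixed-size batches, so memory use stays bounded no matter how big the source is. This is a minimal sketch using only the standard library; `source_rows` is a hypothetical stand-in for a large source that is read lazily, and the batch size of 1,000 is arbitrary.

```python
from itertools import islice

def batched(iterable, size):
    """Yield lists of at most `size` items, so only one batch is held in memory at a time."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch

def source_rows(n):
    # Hypothetical large source: rows are generated one at a time, never all materialized.
    for i in range(n):
        yield {"id": i, "value": i * 2}

loaded = 0
batch_sizes = []
for batch in batched(source_rows(10_500), size=1_000):
    # In a real pipeline each batch would be transformed and written to the target here.
    loaded += len(batch)
    batch_sizes.append(len(batch))
```

On Python 3.12 and later, `itertools.batched` provides the same behavior, yielding tuples instead of lists.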

Employing automation and orchestration tools further reduces manual workload and helps maintain performance even during heavy data loads. Establishing a strong data governance framework addresses compliance issues by ensuring that data is handled in accordance with applicable regulations. With careful planning and the right tools, organizations can overcome ETL challenges and achieve their data management objectives.
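Orchestration tools differ widely, but most of them run pipeline steps in order and retry transient failures. The following is a minimal, tool-agnostic sketch of that idea, not the API of any particular orchestrator: each stage is retried with exponential backoff, and the `sleep` parameter is injectable so the behavior can be tested without actually waiting. The `flaky_extract` function is hypothetical and simply fails on its first call.

```python
import time

def run_with_retries(step, *, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run `step()`; on failure, retry with exponential backoff and re-raise after the last attempt."""
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except Exception:  # a sketch: real code would retry only errors known to be transient
            if attempt == attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))  # base_delay, 2x, 4x, ...

def run_pipeline(extract, transform, load, **retry_opts):
    """Run the three stages in order; each stage is retried independently."""
    raw = run_with_retries(extract, **retry_opts)
    prepared = run_with_retries(lambda: transform(raw), **retry_opts)
    return run_with_retries(lambda: load(prepared), **retry_opts)

calls = {"extract": 0}

def flaky_extract():
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("source temporarily unavailable")
    return [1, 2, 3]

delays = []
result = run_pipeline(
    flaky_extract,
    lambda rows: [r * 10 for r in rows],
    lambda rows: len(rows),
    attempts=3,
    base_delay=0.5,
    sleep=delays.append,  # record delays instead of sleeping
)
```

Retries are only safe when a stage is idempotent, meaning that running it twice has the same effect as running it once. A load that upserts by key, for instance, can be retried without creating duplicates, whereas one that blindly appends rows cannot.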

Ready to elevate your ETL processes and empower your data-driven decision-making? Look no further than CastorDoc. With its advanced governance, cataloging, and lineage capabilities, coupled with a user-friendly AI assistant, CastorDoc is the ultimate tool for businesses seeking to enable self-service analytics. Whether you're a data professional looking to streamline data governance or a business user aiming to harness data insights, CastorDoc is designed to support your goals. Try CastorDoc today and unlock the full potential of your data, making every aspect of ETL work for you.
